
LLMs are trained on vast amounts of data that make them capable of understanding and generating natural language content and performing various tasks.

As LLMs continue to evolve, they are becoming more prevalent in the life sciences industry. Whether used to investigate molecular folding or analyze source data for clinical trials, LLMs present immense opportunities to advance drug discovery and development.

However, with the increased usage of these advanced AI models comes a rise in cybersecurity concerns. The sensitive nature of the life sciences data being processed—coupled with the complexity of LLMs—means that securing these systems is critical to avoid breaches, data leaks, or model manipulations.

In this blog, we’ll explore three cybersecurity standards for LLMs and how they can be applied to threat models, development, and AI risk assessments.

Exploring Cybersecurity Standards for LLMs

It’s important to understand the frameworks that govern secure AI development and deployment before diving into their application and use. Three prominent models we’ll explore are:

- STRIDE threat modeling

- OWASP LLM Top 10 for secure LLM development practices

- NIST AI 100-1 for AI risk management

Threat Modeling: STRIDE

STRIDE identifies and assesses potential threats, which are then categorized as:

- Spoofing: Attackers impersonate users or systems to gain unauthorized access or to input malicious data.

- Tampering: Attackers compromise data integrity with unauthorized modification of data or system components.

- Repudiation: Attackers deny their actions and make it difficult to prove their involvement without proper logging and audit trails.

- Information Disclosure: Attackers expose sensitive information to unauthorized entities, which leads to data breaches or leaks.

- Denial of Service (DoS): Attackers overload systems with excessive requests or resource usage to cause service outages or slowdowns.

- Elevation of Privilege: Attackers gain higher levels of access or control to compromise system security.

After categorization, each threat is evaluated according to:

- Likelihood: The probability that a particular STRIDE threat will be exploited

- Impact: The consequences of the exploit (e.g., damage to assets, loss of data)

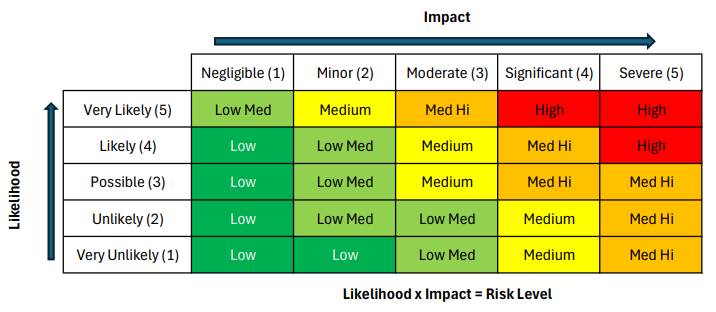

Determining the likelihood and impact of STRIDE threats can be visualized in a risk matrix.

Table 1 Risk matrix for STRIDE threats

Likelihood is assessed on a scale of 1 to 5, where 1 is “very unlikely” and 5 is “very likely.” Similarly, impact is rated on a scale of 1 to 5, where 1 indicates “negligible” and 5 indicates “severe” consequences if the threat materializes.

The overall risk score is calculated by multiplying the likelihood and impact scores, and the result places the risk in one of five levels: low, low-medium, medium, medium-high, or high. This approach ensures that the most pressing threats are prioritized based on quantifiable risk scores.

For example, a threat rated 5 for Likelihood and 5 for Impact has an overall risk score of 25, which indicates a high risk that requires immediate attention. Conversely, a threat rated 1 for Likelihood and 2 for Impact has an overall risk score of 2, which represents a low risk and is a low priority for mitigation efforts.
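The scoring described above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the function names and the band boundaries in `RISK_BANDS` are assumptions made for this example. The text only establishes that a score of 25 is high and a score of 2 is low, so substitute the cut-offs from your own risk matrix.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Multiply likelihood by impact, each rated on the 1-5 scale."""
    for name, value in (("likelihood", likelihood), ("impact", impact)):
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return likelihood * impact


# ASSUMPTION: hypothetical band boundaries (upper bound inclusive).
# Replace them with the cut-offs used in your own risk matrix.
RISK_BANDS = [
    (4, "low"),
    (9, "low-medium"),
    (14, "medium"),
    (19, "medium-high"),
    (25, "high"),
]


def risk_level(score: int) -> str:
    """Map a 1-25 risk score to one of the five named levels."""
    for upper_bound, label in RISK_BANDS:
        if score <= upper_bound:
            return label
    raise ValueError(f"score out of range: {score}")


print(risk_score(5, 5), risk_level(risk_score(5, 5)))  # 25 high
print(risk_score(1, 2), risk_level(risk_score(1, 2)))  # 2 low
```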

Secure LLM Development: OWASP LLM Top 10

The Open Worldwide Application Security Project (OWASP) LLM Top 10 focuses on the most common and critical vulnerabilities associated with LLMs and offers a structured way to build secure LLM systems.

For LLM systems built to analyze clinical trial data or predict molecular folding, it’s imperative to adhere to these best practices.

- Prompt Injection: Carefully crafted inputs are used to alter an LLM’s intended behavior and lead to actions beyond the original design. These attacks can exploit system-level prompts and user-driven inputs that create unpredictable outputs.

- Insecure Output Handling: When LLM outputs are used without verification, they can expose systems to risks like code injection or unauthorized access. This makes backend systems vulnerable to a range of exploits.

- Training Data Poisoning: Manipulating the data used to train an LLM introduces vulnerabilities or biases into the model that may impact the reliability and trustworthiness of the model’s outputs.

- Model Denial of Service: LLMs can be targeted with requests that require excessive processing power and cause delays or interruptions in services and operations.

- Supply Chain Vulnerabilities: Using external data, models, or plugins during the LLM’s lifecycle may introduce hidden flaws that attackers can exploit.

- Sensitive Information Disclosure: LLMs may unintentionally reveal proprietary or confidential information during interactions. Such leaks can result in unauthorized access to sensitive data.

- Insecure Plugin Design: Plugins for LLMs can have design flaws that allow attackers to gain unintended access or control. Poorly secured plugins increase the potential attack surface of an LLM-based system.

- Excessive Agency: LLMs with too much autonomy may perform actions that were not anticipated by their designers. This can lead to unintended consequences, especially when interacting with other systems.

- Overreliance: Relying on LLMs for decision-making without proper scrutiny can result in the propagation of errors or inaccuracies and have serious implications.

- Model Theft: Unauthorized duplication or extraction of an LLM’s architecture compromises its proprietary nature. This may lead to loss of competitive advantage and potential exposure of intellectual property.

AI Risk Assessments: NIST AI 100-1

The National Institute of Standards and Technology (NIST) AI 100-1 is an AI risk management framework that introduces a lifecycle approach to managing AI systems securely. Its four key activities are to:

- Govern: Ensure that AI systems are built in compliance with industry standards and organizational goals.

- Map: Identify and document potential risks in the AI development, deployment, and use lifecycle.

- Measure: Quantify the risks and impacts posed by vulnerabilities to prioritize action.

- Manage: Implement and monitor controls to mitigate identified risks.

Life sciences companies must adopt an AI lifecycle to ensure that LLMs processing critical data—such as clinical trial results or molecular structures—are resilient to cybersecurity threats and remain compliant with regulations like the Health Insurance Portability and Accountability Act (HIPAA) and 21 CFR Part 11.

Delving into Practical Examples: Gene Prioritization and Selection

When you leverage LLMs for gene prioritization (ranking genes on the probability of their association with a particular disease), the opportunities for clinical advancements are immense, but so are the cybersecurity risks.

Let’s look at how to secure LLM-based workflows in knowledge-driven gene selection using the STRIDE, OWASP LLM Top 10, and NIST AI 100-1 cybersecurity standards.

STRIDE Threat Model

The STRIDE threat model identifies potential security threats when deploying LLMs for gene prioritization. Table 2 lists example threats for a workflow that uses LLMs to prioritize genes like ALAS2 and BCL2L1.

Note that these are mock threats to demonstrate how an effective LLM threat model might be constructed. Also note that a threat model would need to include each LLM workflow and interface to be effective.

Numbers in the last three columns represent likelihood, impact, and overall risk. See Table 1 for explanations of ratings.

| Threat Example in Gene Prioritization Workflow | STRIDE Component | Assets Impacted | Likelihood | Impact | Overall Risk |
| --- | --- | --- | --- | --- | --- |
| An attacker gains unauthorized access to the LLM and provides input that falsely prioritizes or deprioritizes genes. | Spoofing | Gene prioritization outputs for ALAS2 and BCL2L1 | 3 | 4 | 12 |
| A malicious modification to the BloodGen3 dataset causes the LLM to deprioritize key genes like BCL2L1. | Tampering | Genomic data used in LLM training | 2 | 5 | 10 |
| An attacker modifies LLM-generated outputs but denies responsibility, which leads to errors in gene prioritization. | Repudiation | Gene prioritization records | 3 | 4 | 12 |
| Data related to ALAS2 and BCL2L1 is inadvertently exposed through insecure LLM outputs, which compromises intellectual property. | Information Disclosure | Proprietary data on gene function and prioritization | 4 | 5 | 20 |
| A DoS attack prevents real-time gene prioritization and delays identification of therapeutic candidates like ALAS2. | Denial of Service (DoS) | Gene prioritization service availability | 3 | 3 | 9 |
| An attacker with elevated privileges alters the LLM’s algorithms to misprioritize genes. | Elevation of Privilege | LLM’s internal decision-making process | 2 | 5 | 10 |

Table 2 STRIDE threat model using mock threats as examples
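To show how a threat model like this feeds prioritization, here is a minimal sketch that loads the mock likelihood and impact ratings from Table 2 and ranks the threats by overall risk. The `Threat` type and its field names are illustrative assumptions; the numbers are the mock values from the table.

```python
from typing import NamedTuple


class Threat(NamedTuple):
    """One row of the mock threat model (illustrative structure)."""
    stride: str
    likelihood: int
    impact: int

    @property
    def risk(self) -> int:
        # Overall risk is likelihood multiplied by impact.
        return self.likelihood * self.impact


# Mock ratings copied from Table 2.
THREATS = [
    Threat("Spoofing", 3, 4),
    Threat("Tampering", 2, 5),
    Threat("Repudiation", 3, 4),
    Threat("Information Disclosure", 4, 5),
    Threat("Denial of Service (DoS)", 3, 3),
    Threat("Elevation of Privilege", 2, 5),
]

# Highest risk first; ties keep their original table order.
ranked = sorted(THREATS, key=lambda t: t.risk, reverse=True)

for threat in ranked:
    print(f"{threat.risk:>2}  {threat.stride}")
```

With these mock values, Information Disclosure (20) is ranked first and Denial of Service (9) last.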

OWASP LLM Top 10

To secure LLM development for gene prioritization, it’s essential to address the OWASP LLM Top 10 vulnerabilities. In the following example, mock threats demonstrate how an effective LLM OWASP assessment might be constructed. Also note that an assessment would need to include each LLM workflow and interface to be effective.

| Number | Description | Example | Mitigation |
| --- | --- | --- | --- |
| LLM01 | Prompt Injection | Malicious prompts mislead the LLM and alter gene prioritization results for genes like ALAS2. | Implement prompt validation and input sanitization to block malicious inputs. |
| LLM02 | Insecure Output Handling | The LLM outputs sensitive genomic data related to ALAS2 or BCL2L1 without proper sanitization. | Employ proper output handling protocols to prevent sensitive data leakage. |
| LLM03 | Training Data Poisoning | Corrupted training data results in incorrect gene selection for therapeutic research. | Use verified datasets and implement data integrity checks. |
| LLM04 | Model Denial of Service | Flooding the LLM with excessive requests delays the processing of gene prioritization. | Implement rate-limiting and resource monitoring to prevent DoS attacks. |
| LLM05 | Supply Chain Vulnerabilities | Third-party components used to train or deploy the LLM are compromised. | Regularly assess and update third-party components for security vulnerabilities. |
| LLM06 | Sensitive Information Disclosure | Sensitive genomic data, such as biomarkers, are exposed through the LLM’s outputs. | Use encryption and data anonymization to protect sensitive genomic information. |
| LLM07 | Insecure Plugin Design | Insecurely designed plugins or integrations compromise the LLM’s ability to prioritize genes. | Ensure secure plugin design with rigorous security reviews. |
| LLM08 | Excessive Agency | The LLM operates outside of its intended scope and misclassifies genes like ALAS2. | Define clear operational boundaries and apply strict oversight on LLM functionality. |
| LLM09 | Overreliance | Overdependence on unverified external data sources for gene prioritization. | Restrict the use of external data to trusted and verified sources. |
| LLM10 | Model Theft | Unauthorized access to the LLM model exposes proprietary gene prioritization algorithms. | Secure access to the LLM model with robust access control mechanisms. |

Table 3 OWASP LLM Top 10 assessment using mock threats as examples
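As one concrete illustration of the rate-limiting mitigation listed for LLM04, here is a minimal token-bucket sketch. The `TokenBucket` class, its parameters, and the injectable `clock` argument are assumptions made for this example. A production deployment would more likely rely on the rate limiting built into its API gateway or serving layer.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float,
                 clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        """Return True if the request may proceed, False if throttled."""
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical policy: bursts of 5 requests, refilled at 1 request per second.
limiter = TokenBucket(capacity=5, refill_per_second=1.0)
results = [limiter.allow() for _ in range(6)]
print(results.count(True), "of 6 immediate requests allowed")
```

Keeping one bucket per user or API key, rather than one global bucket, prevents a single caller from exhausting capacity for everyone else.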

Note that the processes and questions required to build this table are complex. Here’s an example from USDM’s LLM OWASP assessment:

| Number | Description | Potential Bad Actions | Questions |
| --- | --- | --- | --- |
| LLM01 | Prompt Injection | Malicious user injects prompts to extract sensitive information; websites exploit plugins for scams. | How are you controlling privileges and applying role-based permissions for LLM use? |
| | | | Do you require human approval for privileged actions initiated by the LLM? |
| | | | How do you separate untrusted content from user prompts? |
| | | | What methods do you use to visually highlight unreliable LLM responses? |
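The first two questions above, on role-based permissions and human approval for privileged actions, can be expressed as a simple authorization gate. The sketch below is illustrative only: the role names, action names, and the `ActionRequest` structure are hypothetical and do not come from any specific assessment.

```python
from dataclasses import dataclass

# ASSUMPTION: hypothetical roles and actions, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"rank_genes"},
    "admin": {"rank_genes", "update_dataset"},
}

# Actions that need explicit human sign-off even for permitted roles.
PRIVILEGED_ACTIONS = {"update_dataset"}


@dataclass
class ActionRequest:
    """An action the LLM wants to perform on behalf of a user."""
    user_role: str
    action: str
    approved_by_human: bool = False


def authorize(request: ActionRequest) -> bool:
    """Apply role-based permissions, then the human-approval gate."""
    allowed_actions = ROLE_PERMISSIONS.get(request.user_role, set())
    if request.action not in allowed_actions:
        return False
    if request.action in PRIVILEGED_ACTIONS and not request.approved_by_human:
        return False
    return True


print(authorize(ActionRequest("analyst", "rank_genes")))          # True
print(authorize(ActionRequest("analyst", "update_dataset")))      # False
print(authorize(ActionRequest("admin", "update_dataset")))        # False
print(authorize(ActionRequest("admin", "update_dataset", True)))  # True
```

Unknown roles fall through to an empty permission set, so the gate denies by default.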

NIST AI 100-1

Risk assessment is essential to risk governance for the LLM. The following is a high-level overview of how to apply risk standards; there are more than 70 controls in total.

1. Govern: Cultivate a culture of risk management

Governance ensures adherence to regulations and standards such as the General Data Protection Regulation (GDPR), HIPAA, and 21 CFR Part 11, especially when the AI system handles sensitive information.

Key governance actions:

- Regulatory compliance: Ensure that all LLM workflows comply with relevant regulations, particularly when processing sensitive data like personal or clinical records.

- Data governance: Clearly document how data is accessed, stored, and processed to ensure transparency and accountability.

- Trustworthy AI systems: Establish internal policies that promote transparency and fairness in how the LLM processes data and generates results. Ensure outputs are accurate, reproducible, and traceable.

2. Map: Identify risks related to context

Mapping risks helps you understand the end-to-end workflow of the LLM, from ingesting data to generating output. Security vulnerabilities could arise in data management and user interactions.

Key mapping actions:

- Data ingestion: Protect the security and integrity of data ingested by the LLM to prevent tampering or corruption.

- Model training: Ensure that training datasets are reliable, unbiased, and secure to help guard against malicious actors who might inject poisoned data.

- User interactions: Log and monitor all interactions between users and the LLM to prevent unauthorized access and manipulation of sensitive outputs.
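One common way to check the integrity of ingested or training data is to compare a file’s cryptographic digest against a value recorded when the dataset was approved. The sketch below assumes that approach and uses only the Python standard library. The function names are illustrative, and the expected digest would come from your own data governance records.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_dataset(path: Path, expected_sha256: str) -> bool:
    """Return True only if the file matches the recorded digest."""
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(actual, expected_sha256.lower())
```

A pipeline could call `verify_dataset` before each training or ingestion run and stop if it returns `False`. A digest detects modification after approval; it does not show that the approved data was trustworthy in the first place.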

3. Measure: Assess, analyze, and track risks

Measuring the risks associated with LLM deployment is key to understanding how vulnerabilities might be exploited and their potential impact. These measurements help prioritize risk mitigation strategies.

Key measurement actions:

- Likelihood of data tampering: Assess how likely it is for training or input data to be altered, which could compromise the outputs of the LLM.

- Impact of data leaks or model inversion: Determine the severity of potential breaches, particularly if sensitive or proprietary data is exposed.

- Likelihood of adversarial input: Assess how likely it is for adversarial inputs to manipulate the LLM’s responses and lead to incorrect results in critical applications.

4. Manage: Prioritize risks based on project impact

Ongoing management of identified risks is critical to maintaining the integrity of AI systems. Continuous monitoring allows for the timely detection of anomalies or security incidents that may compromise the LLM’s outputs.

Key management actions:

- System activity: Log and monitor all user actions and system processes, especially when handling sensitive data.

- Anomaly detection: Implement AI-driven tools to detect unusual patterns or deviations in the LLM’s performance that could indicate security threats.

- Incident management: Develop and implement a response plan to quickly address any identified cybersecurity incidents to ensure minimal disruption to workflows and protect data integrity.
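Activity logs are most useful for audits when later edits to them can be detected. One way to get that property is a hash chain, in which each entry stores a hash that covers the previous entry. The sketch below assumes that technique; the entry fields and function names are illustrative, and a real system would also need secure storage and timestamps.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, user: str, action: str) -> str:
    """Hash the entry content together with the previous entry's hash."""
    payload = json.dumps(
        {"prev": prev_hash, "user": user, "action": action}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, user: str, action: str) -> None:
    """Append a new entry linked to the current end of the chain."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({
        "prev": prev_hash,
        "user": user,
        "action": action,
        "hash": _entry_hash(prev_hash, user, action),
    })


def verify_log(log: list) -> bool:
    """Recompute every hash and check that each entry links to the last."""
    prev_hash = GENESIS_HASH
    for entry in log:
        expected = _entry_hash(entry["prev"], entry["user"], entry["action"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "analyst_1", "submitted gene prioritization prompt")
append_entry(audit_log, "admin_1", "updated model configuration")
print(verify_log(audit_log))  # True

audit_log[0]["action"] = "nothing to see here"  # simulate tampering
print(verify_log(audit_log))  # False
```

The chain only detects edits if the most recent hash is also recorded somewhere the attacker cannot change it. Otherwise, someone with write access could rebuild the whole chain.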

Adopting GxP Standards in AI Risk Assessments

USDM enhances the NIST AI 100-1 framework by encompassing Good Practice (GxP) standards and providing additional value to life sciences companies.

Key GxP actions:

- Regulatory Compliance and Data Integrity: We ensure that your AI system meets robust GxP guidelines by maintaining secure audit trails, data confidentiality, and traceability. Our experts provide comprehensive documentation for development, deployment, and maintenance that align with regulatory requirements.

- Validation, Verification, and Change Management: We implement rigorous validation and verification processes for reliability and accuracy in AI models. Change management protocols are thoroughly documented to maintain compliance as the system evolves.

- Risk Management and Training: We establish risk management strategies for product quality and patient safety. Our comprehensive training programs ensure that your teams understand relevant GxP principles and practices.

- Vendor and Third-Party Management: We apply rigorous risk assessment and management practices for third-party AI solutions to ensure alignment with your organization’s GxP compliance framework.

- Ethical Considerations and Continuous Improvement: We continuously monitor and improve your AI systems with a focus on ethical considerations like patient confidentiality and fairness.

Secure Your AI: Expert Services for LLM and AI Risk Management

To ensure the safety, compliance, and integrity of your AI solutions, USDM Life Sciences offers tailored services to address the unique challenges of LLMs and AI systems:

- LLM Threat Modeling: We identify potential vulnerabilities in your LLM workflows and implement custom security measures to safeguard your data and processes.

- LLM Secure Development Assessment: Using the OWASP LLM Top 10 as a foundation, our assessments ensure your AI systems are secure and compliant. We help your organization identify risks, mitigate vulnerabilities, and protect sensitive information throughout every stage of LLM development.

- AI Risk Assessments: We assess risks related to data privacy, model governance, and LLM deployment to ensure your AI systems stay compliant and resilient in the face of evolving threats.

Take the next step in securing your AI innovations. Contact USDM today to learn how our expertise in AI risk assessment and LLM vulnerability identification helps your AI systems remain secure, compliant, and resilient.
