Learn why the prevalence and sophistication of deepfake technology in fraud attempts have increased significantly in recent years.

What is a Deepfake?

A deepfake is an image or recording that has been convincingly altered and manipulated to misrepresent someone as doing or saying something that was not actually done or said (Merriam-Webster, 2024). The recent surge in deepfake fraud attempts is largely attributed to generative AI (GenAI) tools.

Accessible and relatively inexpensive tools are used to make “cheapfakes,” which are realistic synthetic media deployed en masse. Attackers who use them aim for volume rather than deepfake-level quality to maximize the probability of a successful breach.

The ability of deepfakes to convincingly mimic individuals is especially concerning in environments where trust and verification are critical.

According to 2023 statistics from Business Wire, the proportion of deepfakes relative to all fraud types jumped from 0.2% to 2.6% in the United States and from 0.1% to 4.6% in Canada. Infosecurity Magazine cites a 450% increase in deepfake use for identity fraud in the Middle East and Africa. These statistics underscore a global trend toward using GenAI tools for fraud.

How is a Deepfake Created?

One technology used to create deepfakes and other synthetic media is the generative adversarial network (GAN). A GAN pairs two machine learning models, a generator and a discriminator, that are trained against each other.

The generator creates content—such as images or videos—and attempts to mimic real-life examples. The content is then passed to the discriminator to evaluate how closely the content resembles the real data it’s trained to authenticate. If the discriminator identifies the content as being artificial or fake, it rejects it.

This feedback is used by the generator to improve its next attempt at creating realistic content. The cycle continues until the discriminator cannot distinguish the fake content from real data, effectively approving the content as realistic.
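The generator and discriminator cycle described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not how real deepfake tools are built: the "media" is a single number, the generator has one learnable offset `b`, and the discriminator is a simple logistic model with parameters `w` and `c`. Real GANs use deep neural networks trained on images, audio, or video. The function name `train_toy_gan` and every hyperparameter here are hypothetical.

```python
import math
import random


def sigmoid(t: float) -> float:
    return 1.0 / (1.0 + math.exp(-t))


def train_toy_gan(real_mean=2.0, steps=2000, batch=32,
                  lr_d=0.1, lr_g=0.01, seed=0):
    """Train a one-parameter generator against a logistic discriminator."""
    rng = random.Random(seed)
    b = 0.0          # generator parameter: fake sample = noise + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c) = P(x is real)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient ascent on
        #   mean(log D(real)) + mean(log(1 - D(fake)))
        grad_w = grad_c = 0.0
        for x in real:
            miss = 1.0 - sigmoid(w * x + c)  # real sample not yet rated "real"
            grad_w += miss * x
            grad_c += miss
        for x in fake:
            fooled = sigmoid(w * x + c)      # fake sample rated "real"
            grad_w -= fooled * x
            grad_c -= fooled
        w += lr_d * grad_w / batch
        c += lr_d * grad_c / batch

        # Generator step: gradient ascent on mean(log D(fake)), which shifts
        # b so that the discriminator rates new fakes as more realistic.
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        grad_b = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        b += lr_g * grad_b

    return b, w, c


b, w, c = train_toy_gan()
print(f"generator offset after training: {b:.2f} (real data is centered on 2.0)")
```

As the generator's output drifts toward the real data, the discriminator has less and less signal to separate the two, which is the point where it can no longer distinguish fake from real.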

GANs are used to enhance realism in video games and generate audiovisual media for films. However, their ability to create highly convincing fake media poses significant risk, especially in the context of financial fraud.

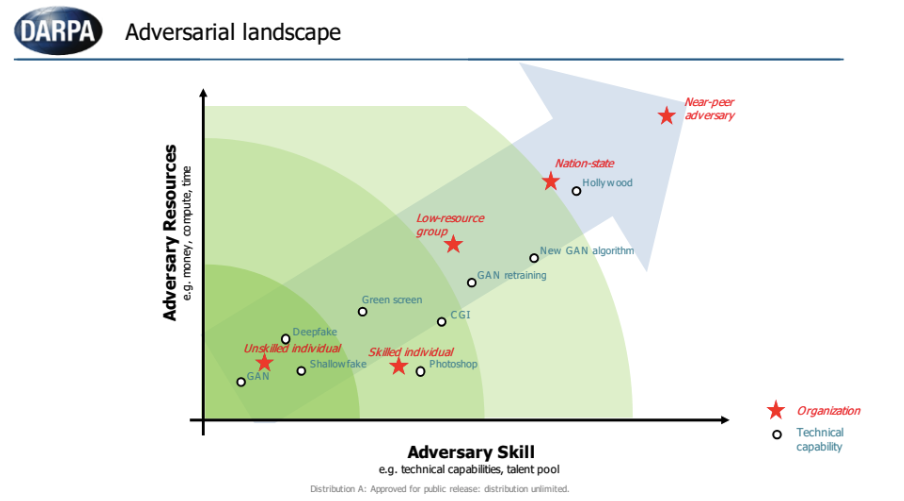

The likelihood of successful deepfakes will increase as the cost of the resources used to produce them decreases. This chart from the Defense Advanced Research Projects Agency (DARPA) shows that GANs don’t require much in terms of technical capabilities or money.

Image source: Increasing Threat of Deepfake Identities, Department of Homeland Security (DHS).

Reframing Truth to Spot the Lies

The primary threat of deepfakes comes from our natural inclination to believe what we see. How many times have you said or heard, “pictures or it didn’t happen”? We equate photos with reality because “pictures don’t lie.” That’s no longer true.

Frighteningly, deepfakes and synthetic media don’t have to be particularly advanced to succeed in spreading misleading or deliberately false information. To understand what threats might look like and how they play out, you can search online for scenarios specific to any industry.

Deepfakes are often used for high-stakes financial fraud, but they’re also used to steal the identities of real people and create false documents, accounts, videos, and voice recordings. These threats can impact various areas, including:

- R&D, PII, and PHI: Competitors may use deepfake videos or voice recordings to impersonate high-level executives or scientists within a company and conduct fake meetings or calls to extract sensitive information about ongoing research and development (R&D) projects, personally identifiable information (PII), and protected health information (PHI).

- Clinical Trial Data Integrity: Fraudsters may create deepfake videos or voice messages that impersonate clinical trial sponsors, such as representatives from pharmaceutical companies. These fake communications deliver false instructions to clinical research teams that could potentially modify trial protocols or data reporting procedures and further the fraudsters’ deceptive agendas.

- Intellectual Property: Competitors or malicious actors may employ deepfake technology to impersonate senior executives or researchers from other organizations. They propose partnerships to extract sensitive research data or intellectual property during the supposed “collaboration.”

There’s no definitive solution for fending off deepfakes, but education and regulations are significant mitigation measures. Keep in mind the following when talking to a person requesting a financial transaction:

- Requests that convey a sense of urgency are often red flags. A transaction request rarely requires an immediate response. If the request seems suspicious, it’s acceptable to say, “Let me take your name and number and I’ll get back to you.”

- Requests for sensitive information or to execute a significant financial transaction—even if they appear to come from an internal source—should still be verified through a secondary contact in the company, such as a supervisor or someone in the same department.

- Check the caller ID against known internal numbers and confirm the call-back number with an official company directory. Never use information provided by the caller to verify their authenticity.
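The checks above can also be expressed as a simple screening routine. The sketch below is illustrative only: the `TransactionRequest` fields, the `red_flags` function, and the phone numbers are hypothetical, and it assumes your organization keeps an official directory of known internal numbers.

```python
from dataclasses import dataclass


@dataclass
class TransactionRequest:
    caller_id: str                       # number shown on the incoming call
    callback_number: str                 # number the caller asks you to use
    marked_urgent: bool                  # caller is pressing for immediate action
    verified_by_secondary_contact: bool  # confirmed with a supervisor or peer


def red_flags(request: TransactionRequest, directory: set[str]) -> list[str]:
    """Return the reasons a request needs more verification (empty if none)."""
    flags = []
    if request.marked_urgent:
        flags.append("request conveys a sense of urgency")
    if not request.verified_by_secondary_contact:
        flags.append("not verified through a secondary contact")
    if request.caller_id not in directory:
        flags.append("caller ID is not a known internal number")
    if request.callback_number not in directory:
        # Never trust contact details supplied by the caller.
        flags.append("call-back number is not in the official directory")
    return flags


official_directory = {"+1-555-0100", "+1-555-0101"}  # made-up numbers
request = TransactionRequest(
    caller_id="+1-555-0199",
    callback_number="+1-555-0199",
    marked_urgent=True,
    verified_by_secondary_contact=False,
)
for reason in red_flags(request, official_directory):
    print("Hold the transaction:", reason)
```

A routine like this does not detect deepfakes; it only makes sure the human checks are applied consistently before money moves.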

Your organization should also establish thresholds for further verification. For example:

Transactions less than $5,000:

- Require approval by the employee’s direct manager and the head of a business unit to ensure oversight and accountability.

Transactions from $5,000 to $20,000:

- Require approval from the head of a business unit and the chief financial officer (CFO).

- Require a secondary review by a compliance or audit team member for new and recurring transactions.

Transactions from $20,000 to $100,000:

- Require approval from the CFO and at least two other members of the executive team (or their delegates).

- Require a secondary review by a compliance or audit team member for new and recurring transactions, especially if they are high-risk or unusual.

- Implement conditional approvals based on meeting specific criteria, such as budget availability or completing a due diligence process.

Transactions over $100,000:

- Require approval from the CEO and board of directors.

- Consider requiring an external review or audit before approving exceptionally large transactions.

- Require that approvals from the CEO and the board are recorded in meeting minutes to ensure accountability and transparency.

At every level, conduct randomized transaction audits to detect and deter potential fraud. Rotate approvers to prevent collusion.
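The example thresholds above translate naturally into a lookup that an approval workflow could call. This is a minimal sketch, assuming the tier boundaries are resolved as follows (the text leaves them open): exactly $20,000 falls into the $20,000 to $100,000 tier, and exactly $100,000 stays in that tier because the top tier applies to amounts over $100,000. The function name `required_approvals` and the label strings are hypothetical.

```python
def required_approvals(amount: float) -> list[str]:
    """Map a transaction amount in dollars to the example approval steps."""
    if amount < 0:
        raise ValueError("amount must not be negative")
    if amount < 5_000:
        return ["direct manager", "head of business unit"]
    if amount < 20_000:
        return [
            "head of business unit",
            "CFO",
            "compliance or audit review",
        ]
    if amount <= 100_000:  # assumption: exactly $100,000 stays in this tier
        return [
            "CFO",
            "two other executive team members (or delegates)",
            "compliance or audit review",
            "conditional approval criteria met",
        ]
    return [
        "CEO",
        "board of directors (recorded in meeting minutes)",
        "external review or audit (if exceptionally large)",
    ]


for amount in (1_200, 12_500, 75_000, 250_000):
    print(f"${amount:,}: {', '.join(required_approvals(amount))}")
```

Keeping the tiers in one function makes it easier to adjust the dollar amounts to your organization's risk tolerance without changing the rest of the workflow.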

The Role of a Chief Information Security Officer

Chief Information Security Officers (CISOs) help defend against sophisticated threats like deepfakes. They develop and implement comprehensive security strategies to protect an organization’s information and assets.

In the context of deepfakes, CISOs employ advanced detection technologies and establish rigorous verification protocols to mitigate risks. Using AI-driven tools, CISOs identify anomalies and authenticate media to swiftly detect and neutralize deepfake attempts.

Their proactive approach safeguards against financial fraud, maintains the integrity of sensitive information, and upholds organizational trust.

USDM’s virtual Chief Information Security Officer (vCISO) services offer extensive expertise in mitigating the risks associated with sophisticated digital threats, including deepfakes. We enhance your existing cybersecurity measures and provide essential education on emerging threats.

Contact us to discuss your security needs and protect your organization with robust and adaptive defenses.